As part of NVIDIA, Mellanox Technologies is a leading supplier of end-to-end Ethernet and InfiniBand intelligent interconnect solutions and services for servers, storage, and hyper-converged infrastructure.

Mellanox intelligent interconnect solutions increase data center efficiency by providing the highest throughput and lowest latency, delivering data faster to applications and unlocking system performance.

Mellanox offers a broad choice of high-performance solutions, including network and multicore processors, network adapters, switches, cables, software, and silicon, that accelerate application runtime and maximize business results for a wide range of markets, including high-performance computing, enterprise data centers, Web 2.0, cloud, storage, network security, telecom, and financial services.

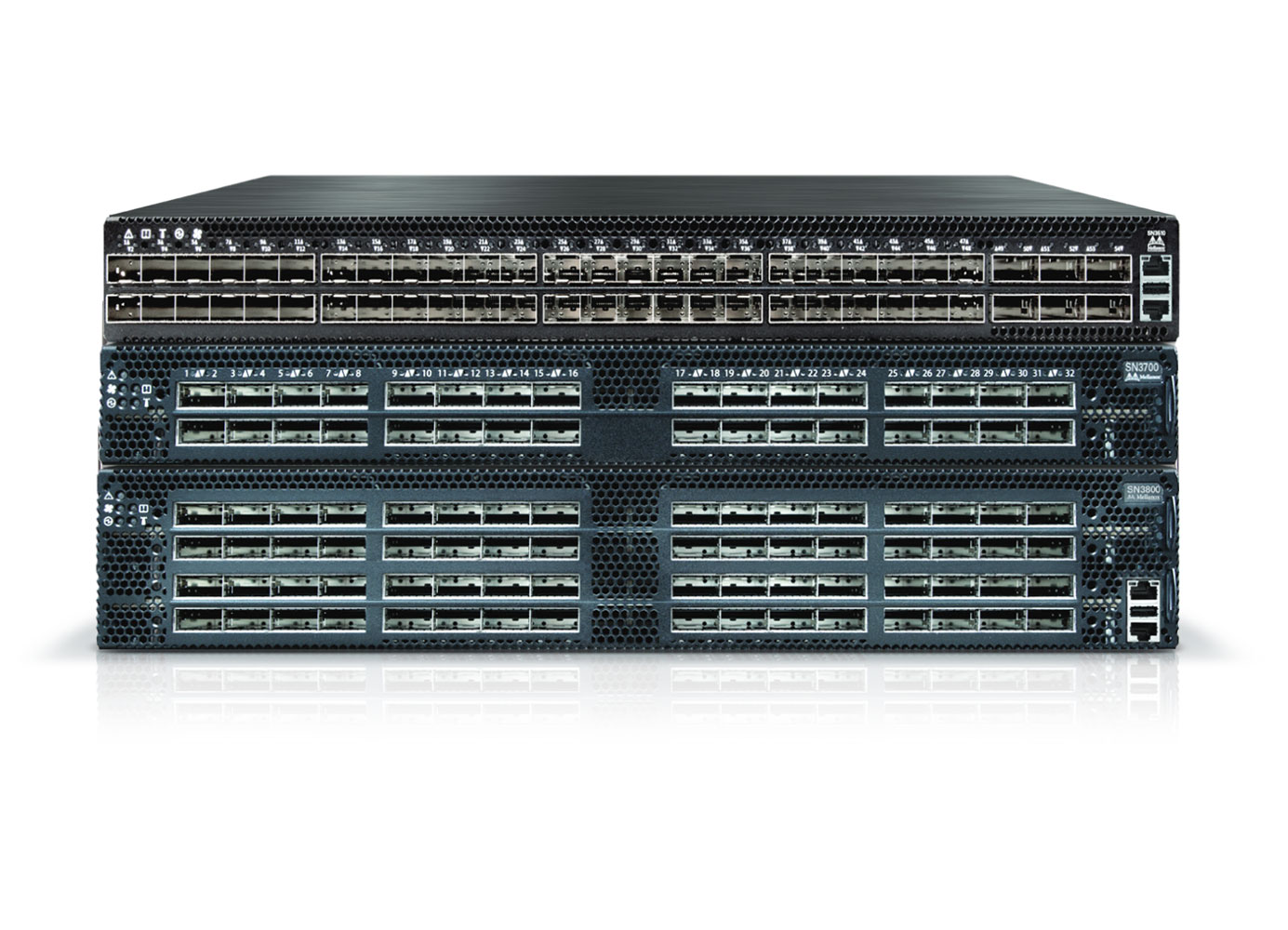

The Mellanox Spectrum® Ethernet Switch product family includes a broad portfolio of Top-of-Rack and aggregation switches. Spectrum switches come in flexible form factors with 16 to 128 physical ports supporting 1GbE through 400GbE. Built on ground-breaking silicon technology optimized for performance and scale, Spectrum switches are ideal for building high-performance, cost-effective, and efficient Cloud Data Center Networks, Ethernet Storage Fabrics, and Deep Learning Interconnects.

Mellanox LinkX cables and transceivers make 100Gb/s deployments as easy and as universal as 10Gb/s links.

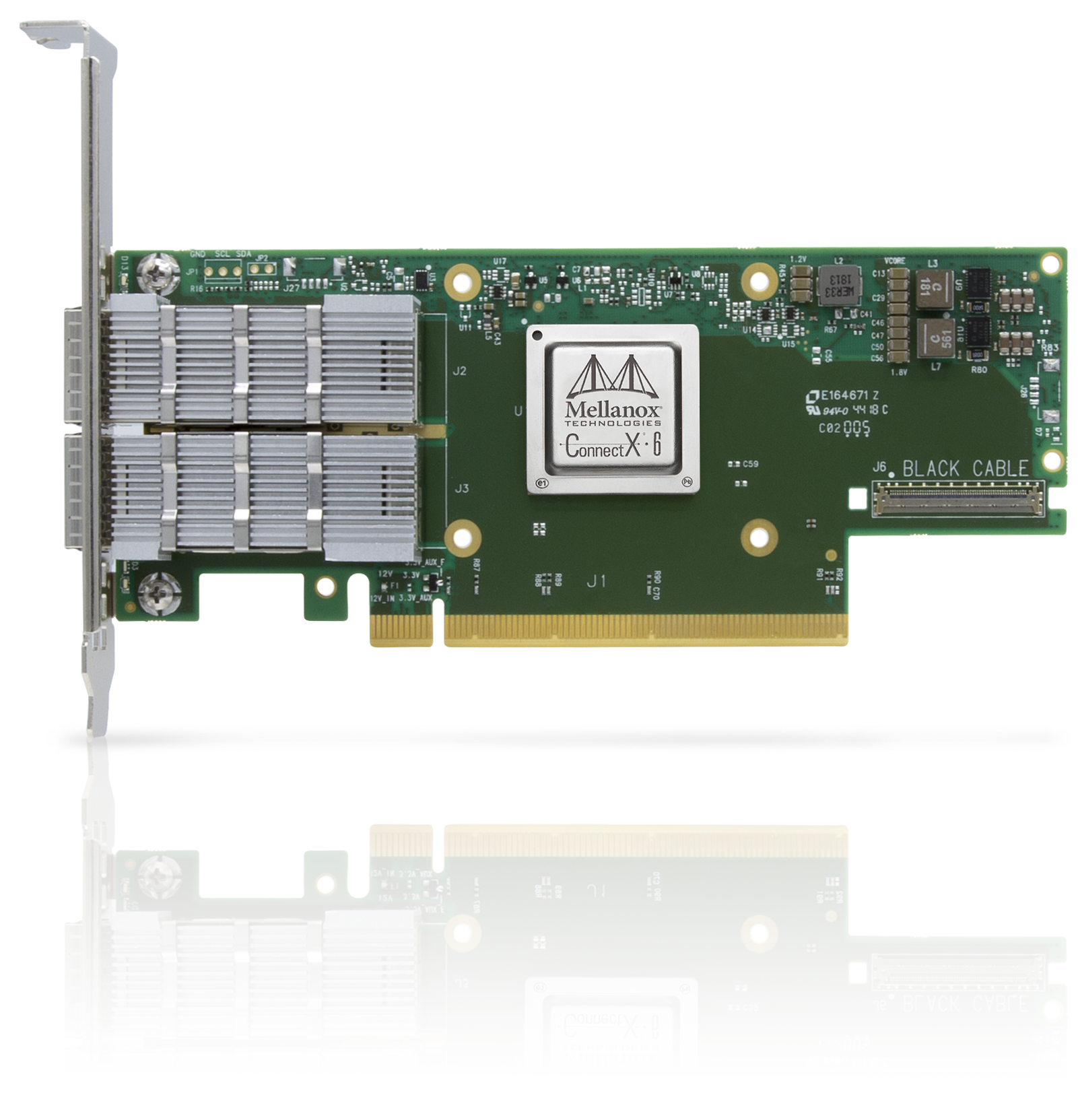

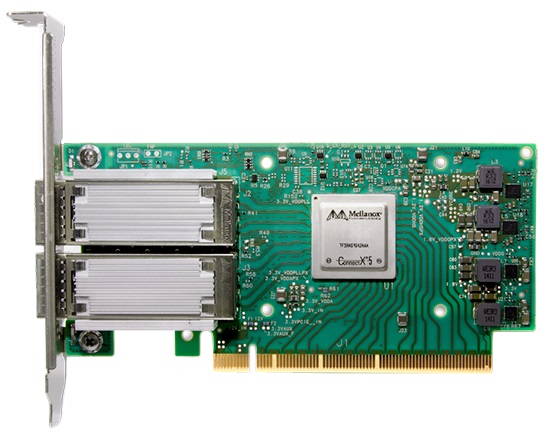

Leveraging faster speeds and innovative In-Network Computing, Mellanox InfiniBand smart adapters achieve extreme performance and scale, lowering cost per operation and increasing ROI for high-performance computing, machine learning, advanced storage, clustered databases, low-latency embedded I/O applications, and more.

Mellanox's family of InfiniBand switches delivers the highest performance and port density, with complete fabric management solutions that enable compute clusters and converged data centers to operate at any scale while reducing operational costs and infrastructure complexity.

Mellanox provides a series of tools to properly configure a cluster based on your choice of interconnect (InfiniBand or Ethernet). The configuration tools provide complete cluster configurations and full topology reports with recommended OEM-specific product part numbers (SKUs).